Data Engineering: Architecture, Tools, Use Cases & Career Guide (2026)

A complete, practical guide to understanding modern data engineering systems.

Data engineering is the backbone of modern analytics, AI, and digital products. This guide is designed for developers, data professionals, architects, and business leaders who want to understand how data systems are designed, scaled, and optimized in real-world environments.

From raw data ingestion to analytics-ready datasets, data engineering addresses critical concerns such as data reliability, scalability, governance, and real-time processing. This guide breaks complex concepts down into clear, actionable insights aligned with modern industry practices.

What Is Data Engineering?

Data engineering is the practice of designing, building, and maintaining systems that collect, store, process, and deliver data for analytics, reporting, and machine learning.

Its focus is on:

- Data pipelines, not dashboards

- Scalability, not just insights

- Reliability and performance

A strong data engineering foundation enables faster decision-making, accurate analytics, scalable AI models, and regulatory compliance.

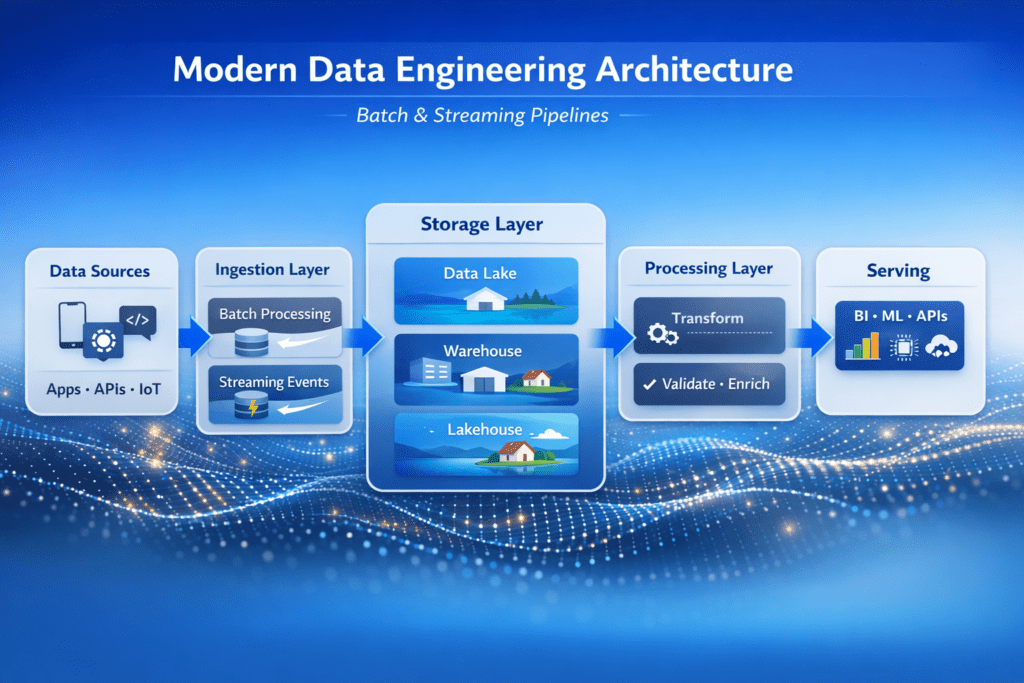

Modern Data Engineering Architecture

1. Data Sources (Apps, APIs, IoT)

2. Ingestion Layer (Batch & Streaming)

3. Storage Layer (Lake, Warehouse, Lakehouse)

4. Processing Layer

5. Serving Layer
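
The five layers above can be sketched as a chain of small functions. This is a minimal illustration using only the Python standard library, not a production design: the sample events, the function names (`ingest`, `store`, `process`, `serve`), and the in-memory list standing in for a lake or warehouse are all assumptions made for the example.

```python
import json
from collections import defaultdict

# 1. Data source: raw events as an app or API might emit them (made-up sample data).
RAW_EVENTS = [
    '{"user": "a", "amount": "10.5"}',
    '{"user": "b", "amount": "4.0"}',
    '{"user": "a", "amount": "2.5"}',
    'not-json',  # a malformed record the pipeline must tolerate
]

def ingest(lines):
    """2. Ingestion: parse one batch of raw lines, skipping malformed records."""
    records = []
    for line in lines:
        try:
            records.append(json.loads(line))
        except json.JSONDecodeError:
            continue
    return records

def store(records, storage):
    """3. Storage: append parsed records to a list standing in for a table."""
    storage.extend(records)
    return storage

def process(storage):
    """4. Processing: cast amounts to float and aggregate spend per user."""
    totals = defaultdict(float)
    for row in storage:
        totals[row["user"]] += float(row["amount"])
    return dict(totals)

def serve(totals, user):
    """5. Serving: answer a lookup the way a dashboard or API might."""
    return totals.get(user, 0.0)

storage = store(ingest(RAW_EVENTS), [])
totals = process(storage)
print(serve(totals, "a"))
```

In a real system each function would typically be a separate service or job, but the hand-off between layers follows the same shape.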

Data Engineering Tools & Technologies

- Ingestion: Kafka, Airbyte, Fivetran

- Storage: S3, BigQuery, Snowflake

- Processing: Spark, Flink, dbt

- Orchestration: Airflow, Prefect

- Cloud: AWS, Azure, GCP
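
Orchestration tools such as Airflow and Prefect are built around running tasks in dependency order. The sketch below shows only that core idea using Python's standard-library `graphlib` (Python 3.9+). It does not use either tool's API, and the task names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each maps to the set of tasks it depends on.
DEPENDENCIES = {
    "extract_orders": set(),
    "load_to_storage": {"extract_orders"},
    "transform_orders": {"load_to_storage"},
    "publish_report": {"transform_orders"},
}

def run_pipeline(dependencies, tasks):
    """Run every task after all of its dependencies and return the order used."""
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name]()
    return order

# Each task here just records that it ran; real tasks would move or transform data.
log = []
TASKS = {name: (lambda n=name: log.append(n)) for name in DEPENDENCIES}

order = run_pipeline(DEPENDENCIES, TASKS)
print(order)
```

Dedicated orchestrators add scheduling, retries, and monitoring on top of this ordering step, which is why they are usually preferred over hand-rolled scripts.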

Real-World Data Engineering Use Cases

- Fintech – Fraud detection

- Retail – Customer analytics

- SaaS – Product telemetry

- Healthcare – Patient data pipelines

Common Challenges in Data Engineering

- Data quality & consistency

- Scalability & performance

- Cost optimization

- Governance & compliance
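
Data quality problems are often caught with simple automated checks that run before data is published. The following is a minimal sketch of two such checks (required fields and duplicate keys). The `check_quality` function, its parameters, and the sample rows are assumptions made for illustration.

```python
def check_quality(rows, required, unique_key):
    """Return a list of human-readable issues found in rows (a list of dicts)."""
    issues = []
    seen = set()
    for i, row in enumerate(rows):
        # Completeness: every required field must be present and non-empty.
        for field in required:
            if row.get(field) in (None, ""):
                issues.append(f"row {i}: missing {field}")
        # Uniqueness: the key must not repeat across rows.
        key = row.get(unique_key)
        if key is not None:
            if key in seen:
                issues.append(f"row {i}: duplicate {unique_key}={key}")
            seen.add(key)
    return issues

rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 1, "email": "c@example.com"},
]
issues = check_quality(rows, required=["id", "email"], unique_key="id")
print(issues)
```

A pipeline could fail the run, or quarantine the offending rows, whenever the returned list is non-empty.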

Future of Data Engineering (2026 & Beyond)

- Lakehouse architecture

- Streaming-first pipelines

- AI-assisted data workflows

- Data products mindset
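
To make "streaming-first" more concrete: instead of waiting for a nightly batch, a streaming pipeline emits results as events arrive. The sketch below counts events per fixed (tumbling) time window. It assumes events are `(timestamp_seconds, key)` tuples that arrive in timestamp order, and the function name is hypothetical. Engines such as Flink also handle late and out-of-order data, which this example does not.

```python
from collections import Counter

def tumbling_window_counts(events, window_seconds):
    """Yield (window_start, counts) whenever an event falls into a new window.

    Assumes events are (timestamp_seconds, key) tuples in timestamp order.
    """
    current_start = None
    counts = Counter()
    for ts, key in events:
        start = ts - ts % window_seconds  # start of the window containing ts
        if current_start is None:
            current_start = start
        elif start != current_start:
            # The previous window is complete: emit it and start a new one.
            yield current_start, dict(counts)
            current_start, counts = start, Counter()
        counts[key] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the final window

events = [(0, "click"), (3, "view"), (9, "click"), (12, "click"), (25, "view")]
windows = list(tumbling_window_counts(events, window_seconds=10))
print(windows)
```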

Frequently Asked Questions

Is data engineering hard?

It requires strong fundamentals but is highly learnable.

Do data engineers need coding?

Yes, Python and SQL are essential.

What tools should I learn first?

SQL, Python, cloud storage, and orchestration tools.
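
As a small taste of how Python and SQL work together, the sketch below loads a few rows into an in-memory SQLite database and aggregates them with SQL. The `orders` table and its sample rows are made up for illustration. SQLite stands in for a warehouse here only because it ships with Python.

```python
import sqlite3

# An in-memory database stands in for a real warehouse in this example.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("alice", 20.0), ("bob", 5.0), ("alice", 7.5)],
)

# SQL does the aggregation; Python handles setup and reads the result.
rows = conn.execute(
    "SELECT customer, SUM(amount) AS total "
    "FROM orders GROUP BY customer ORDER BY total DESC"
).fetchall()
conn.close()
print(rows)
```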
